SOMERVILLE, Mass.

It has taken six months, but with this edition, Economic Principals finally makes good on its previously announced intention to move to Substack publishing. What took so long? I can’t blame the pandemic. Better to say it’s complicated. (Substack is an online platform that provides publishing, payment, analytics and design infrastructure to support subscription newsletters.)

EP originated in 1983 as columns in the business section of The Boston Sunday Globe. It appeared there for 18 years, winning a Loeb award in the process. (I had won another Loeb a few years before, at Forbes.) The logic of EP was simple: It zeroed in on economics because Boston was the world capital of the discipline; it emphasized personalities because otherwise the subject was intrinsically dry (hence the punning name). A Tuesday column was soon added, dwelling more on politics, because economics and politics were essentially inseparable in my view.

The New York Times Co. bought The Globe in 1993, for $1.13 billion, took control of it in 1999 after a standstill agreement expired, and, in July 2001, installed a new editor, Martin Baron. On his second morning on the job, Baron instructed the business editor, Peter Mancusi, that EP was no longer permitted to write about politics. I didn’t understand, but tried to comply. I failed to meet expectations, and in January, Baron killed the column. It was clearly within his rights. Metro columnist Mike Barnicle had been cancelled, publisher Benjamin Taylor had been replaced, and editor Matthew Storin, privately maligned for having knuckled under too often to the Boston archdiocese of the Roman Catholic Church, retired. I was small potatoes, but there was something about The Globe’s culture that the NYT Co. didn’t like. I quit the paper and six weeks later moved the column online.

After experimenting with various approaches for a couple of years, I settled on a business model that resembled public radio in the United States – a relative handful of civic-minded subscribers supporting a service otherwise available for free to anyone interested. An annual $50 subscription brought an early (bulldog) edition of the weekly via email on Saturday night. Late Sunday afternoon, the column went up on the Web, where it (and its archive) has been ever since, available to all comers for free.

Only slowly did it occur to me that perhaps I had been obtuse about those “no politics” instructions. In October 1996, five years before they were given, I had raised caustic questions about the encounter for which then U.S. Sen. John Kerry (D.-Mass.) had received a Silver Star in Vietnam 25 years before. Kerry was then running for re-election. I began to suspect that this history had something to do with Baron ordering me to steer clear of politics in 2001.

• ••

John Kerry had become well known in the early ‘70s as a decorated Navy war hero who had turned against the Vietnam War. I’d covered the war for two years, 1968-70, traveling widely, first as an enlisted correspondent for Pacific Stars and Stripes, then as a Saigon bureau stringer for Newsweek. I was critical of the premises the war was based on, but not as disparaging of its conduct as was Kerry. I first heard him talk in the autumn of 1970, a few months after he had unsuccessfully challenged the anti-war candidate Rev. Robert Drinan, then the dean of Boston College Law School, for the right to run against the hawkish Philip Philbin in the Democratic primary. Drinan won the nomination and the November election. He was re-elected four times.

As a Navy veteran, I was put off by what I took to be the vainglorious aspects of Kerry’s successive public statements and candidacies, especially in the spring of 1971, when in testimony before the Senate Foreign Relations Committee, he repeated accusations he had made on Meet the Press that thousands of atrocities amounting to war crimes had been committed by U.S. forces in Vietnam. The next day he joined other members of Vietnam Veterans Against the War in throwing medals (but not his own) over a fence at the Pentagon.

In 1972, he tested the waters in three different congressional districts in Massachusetts before deciding to run in one, an election that he lost. He later gained electoral successes in the Bay State, winning the lieutenant governorship on the Michael Dukakis ticket in 1982, and a U.S. Senate seat in 1984, succeeding Paul Tsongas, who had resigned for health reasons. Kerry remained in the Senate until 2013, when he resigned to become secretary of state. [Correction added]

Twenty-five years after his Senate testimony, as a columnist I more than once expressed enthusiasm for the possibility that a liberal Republican – venture capitalist Mitt Romney or Gov. Bill Weld – might defeat Kerry in the 1996 Senate election. (Weld had been a college classmate, though I had not known him.) This was hardly disinterested newspapering, but as a columnist, part of my job was to express opinions.

In the autumn of 1996, the recently re-elected Weld challenged Kerry’s bid for a third term in the Senate. The campaign brought old memories to life. On Sunday Oct. 6, The Globe published long side-by-side profiles of the candidates, extensively reported by Charles Sennott.

The Kerry story began with an elaborate account of his experiences in Vietnam – the candidate’s first attempt, I believe, since 1971 to tell the story of his war. After Kerry boasted of his service during a debate 10 days later, I became curious about the relatively short time he had spent in Vietnam – four months. I began to research a column. Kerry’s campaign staff put me in touch with Tom Belodeau, a bow gunner on the patrol boat that Kerry had beached after a rocket was fired at it to begin the encounter for which he was recognized with a Silver Star.

Our conversation lasted half an hour. At one point, Belodeau confided, “You know, I shot that guy.” That evening I noticed that the bow gunner played no part in Kerry’s account of the encounter in a New Yorker article by James Carroll in October 1996 – an account that seemed to contradict the medal citation itself. That led me to notice the citation’s unusual language: “[A]n enemy soldier sprang from his position not 10 feet [from the boat] and fled. Without hesitation, Lieutenant (Junior Grade) Kerry leaped ashore, pursued the man behind a hootch and killed him, capturing a B-40 rocket launcher with a round in the chamber.” There are now multiple accounts of what happened that day. Only one of them, the citation, is official, and even it seems to exist in several versions. What is striking is that with the reference to the hootch, the anonymous author uncharacteristically seems to take pains to imply that nobody saw what happened.

The first column (“The War Hero”) ran Tues., Oct. 24. Around that time, a fellow former Swift Boat commander, Edward (Tedd) Ladd, phoned The Globe’s Sennott to offer further details and was immediately passed on to me. Belodeau, a Massachusetts native who was living in Michigan, wanted to avoid further inquiries, I was told. I asked the campaign for an interview with Kerry. His staff promised one, but day after day, failed to deliver. Friday evening arrived and I was left with the draft of a column for Sunday Oct. 27 about the citation’s unusual phrase (“Behind the Hootch”). It included a question that eventually came to be seen among friends as an inside joke aimed at other Vietnam vets (including a dear friend who sat five feet away in the newsroom): Had Kerry himself committed a war crime, at least under the terms of his own sweeping indictments of 1971, by dispatching a wounded man behind a structure where what happened couldn’t be seen?

The joke fell flat. War crime? A bad choice of words! The headline? Even worse. Lacking the campaign’s promised response, the column was woolly and wholly devoid of significant new information. It certainly wasn’t the serious accusation that Kerry indignantly denied. Well before the Sunday paper appeared, Kerry’s staff apparently knew what it would say. They organized a Sunday press conference at the Boston Navy Yard, which was attended by various former crew members and the admiral who had presented his medal. There the candidate vigorously defended his conduct and attacked my coverage, especially the implicit wisecrack the second column contained. I didn’t learn about the rally until late that afternoon, when a Globe reporter called me for comment.

I was widely condemned. Fair enough: this was politics, after all, not beanbag. (Caught in the middle, Globe editor Storin played fair throughout with both the campaign and me.) The election, less than three weeks away, had been refocused. Kerry won by a wider margin than he might have otherwise. (Kerry’s own version of the events of that week can be found on pp. 223-225 of his autobiography.)

• ••

Without knowing it, I had become, in effect, a charter member of the Swift Boat Veterans for Truth. That was the name of a political organization that surfaced in May 2004 to criticize Kerry, in television advertisements, on the Web, and in a book, Unfit for Command. What I had discovered in 1996 was little more than what everyone learned in 2004 – that some of his fellow sailors disliked Kerry intensely. In conversations with many Swift Boat vets over the year or two after the columns, I learned that many bones of contention existed. But the book about the recent history of economics I was finishing and the online edition of EP that kept me in business were far more important. I was no longer a card-carrying member of a major news organization, so after leaving The Globe I gave the slowly developing Swift Boat story a good leaving alone. I spent the first half of 2004 at the American Academy in Berlin.

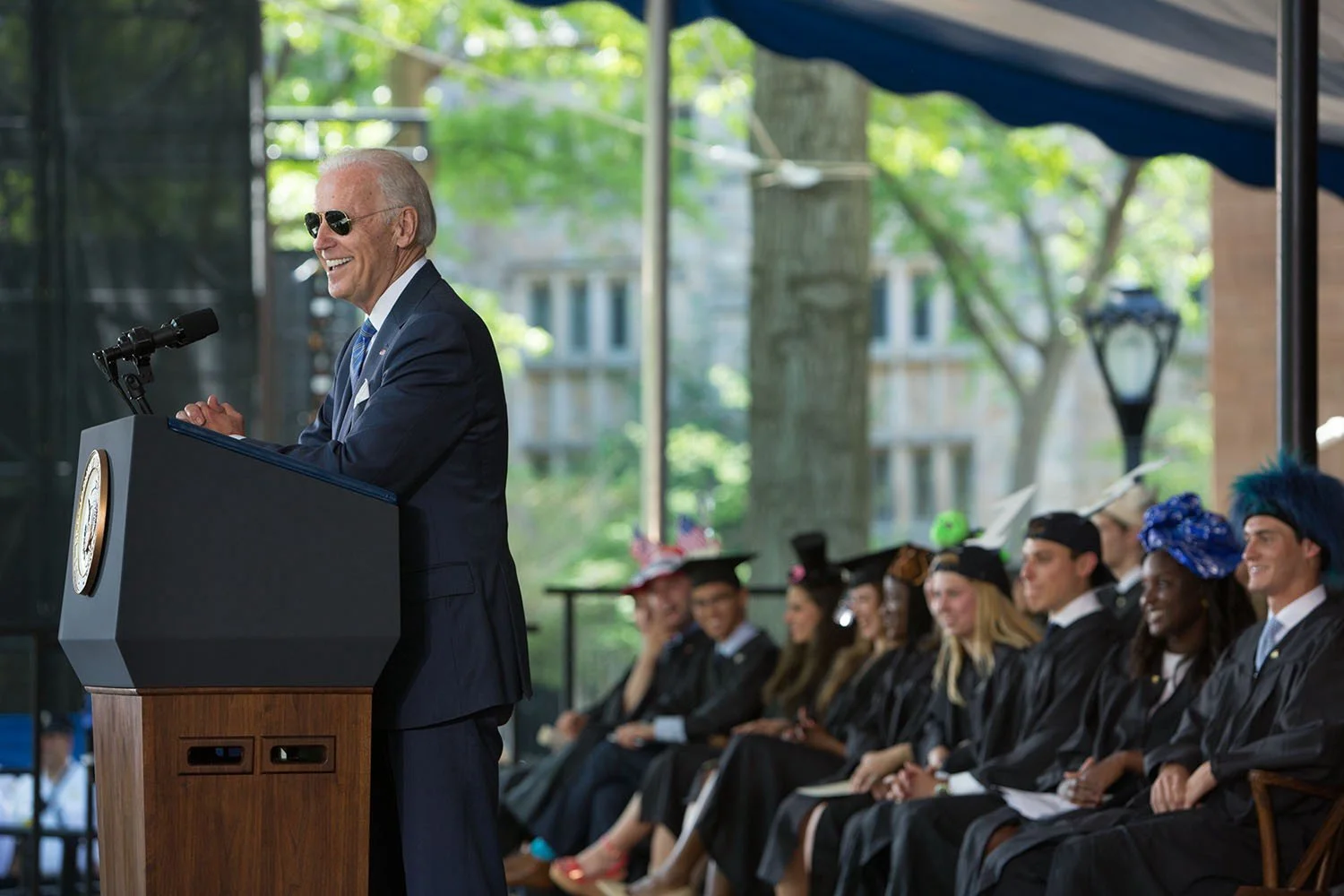

Whatever his venial sins, Kerry redeemed himself thoroughly, it seems to me, by declining to contest the result of the 2004 election, after the vote went against him by a narrow margin of 118,601 votes in Ohio. He served as secretary of state for four years in the Obama administration and was named special presidential envoy for climate change, a Cabinet-level position, by President Biden.

Baron organized The Globe’s Pulitzer Prize-winning Spotlight coverage of Catholic Church secrecy about sexual abuse by priests, and it turned into a world story and a Hollywood film. In 2013 he became editor of The Washington Post and steered a steady course as Amazon founder Jeff Bezos acquired the paper from the Graham family and Donald Trump won the presidency and then lost it. Baron retired in February. He is writing a book about those years.

But in 2003, John F. Kerry: The Complete Biography by the Boston Globe Reporters Who Know Him Best was published by PublicAffairs Books, a well-respected publishing house whose founder, Peter Osnos, had himself been a Vietnam correspondent for The Washington Post. Baron, The Globe’s editor, wrote in a preface, “We determined… that The Boston Globe should be the point of reference for anyone seeking to know John Kerry. No one should discover material about him that we hadn’t identified and vetted first.”

All three authors – Michael Kranish, Brian Mooney, Nina Easton – were skilled newspaper reporters. Their propensity for careful work appears on (nearly) every page. Mooney and Kranish I believed I knew well. But the latter, who was assigned to cover Kerry’s early years, his upbringing, and his combat in Vietnam, never spoke to me in the course of his reporting. The 1996 campaign episode in which I was involved is described in three paragraphs on page 322. The New Yorker profile by James Carroll that prompted my second column isn’t mentioned anywhere in the book; and where the Silver Star citation is quoted (page 104), the phrase that attracted my attention, “behind the hootch,” is replaced by an ellipsis. (An after-action report containing the phrase is quoted on page 102.)

Nor did Baron and I ever speak of the matter. What might he have known about it? He had been appointed night editor of The Times in 1997, last-minute assessor of news not yet fit to print; I don’t know whether he was already serving in that capacity in October 1996, when my Globe columns became part of the Senate election story. I do know he commissioned the project that became the Globe biography in December 2001, a few weeks before terminating EP.

Kranish today is a national political investigative reporter for The Washington Post. Should I have asked him about his Globe reporting, which seems to me lacking in context? I think not. (I let him know this piece was coming; I hope that we’ll eventually talk privately.) But my subject here is how The Globe’s culture changed after NYT Co. acquired the paper, so I believe his incuriosity and that of his editor are facts that speak for themselves.

Baron’s claims of authority in his preface to The Complete Biography by the Boston Globe Reporters Who Know Him Best strike me as having been deliberately dishonest, a calculated attempt to forestall further scrutiny of Kerry’s time in Vietnam. In this, Baron’s book failed. It is a far more careful and even-handed account than Tour of Duty: John Kerry and the Vietnam War (Morrow, 2004), historian Douglas Brinkley’s campaign biography. Mooney’s sections on Kerry’s years in Massachusetts politics are especially good. But as the sudden re-appearance of the Vietnam controversy in 2004 demonstrated, The Globe’s account left much on the table.

• ••

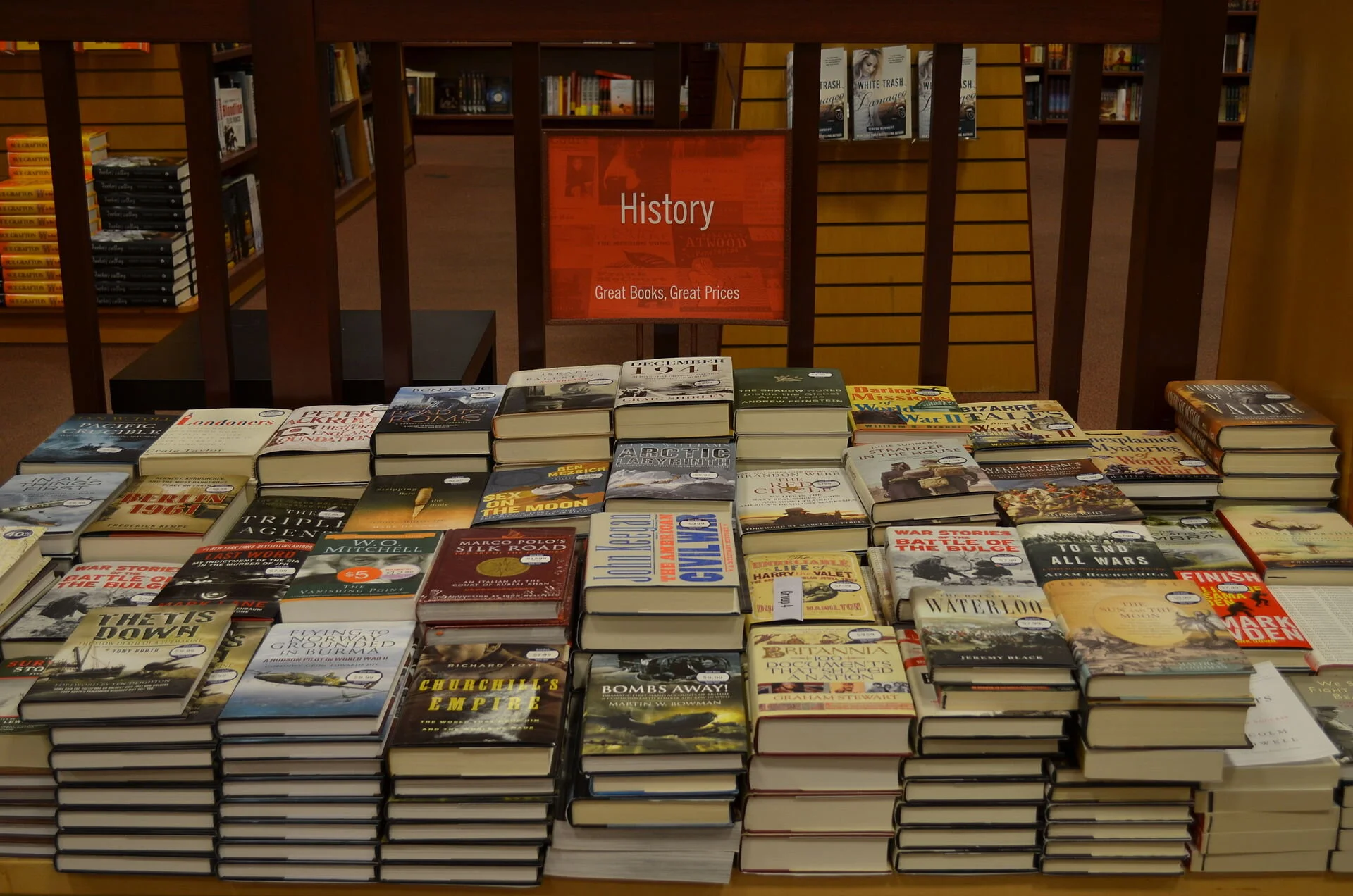

I mention these events now for two reasons. The first is that the Substack publishing platform has created a path that did not exist before to an audience – in this case several audiences – concerned with issues about which I have considerable expertise. The first EP readers were drawn from those who had followed the column in The Globe. Some have fallen away; others have joined. A reliable 300 or so annual Bulldog subscriptions have kept EP afloat.

Today, with a thousand online columns and two books behind me – Knowledge and the Wealth of Nations: A Story of Economic Discovery (Norton, 2006) and Because They Could: The Harvard Russia Scandal (and NATO Expansion) after Twenty-Five Years (CreateSpace, 2018) – and a third book on the way, my reputation as an economic journalist is better established.

The issues I discuss here today have to do with aspirations to disinterested reporting and open-mindedness in the newspapers I read, and, in some cases, the failure to achieve those lofty goals. I have felt deeply for 25 years about the particular matters described here; I was occasionally tempted to pipe up about them. Until now, the reward of regaining my former life as a newsman by re-entering the discussion never seemed worth the price I expected to pay.

But the success of Substack says to writers like me, “Put up or shut up.” After the challenge it posed dawned on me in December, I perked up, then hesitated for several months before deciding to leave my comfortable backwater for a lively and growing ecosystem. Newsletter publishing now has certain features in common with the market for national magazines that emerged in the U.S. in the second half of the 19th century – a mezzanine tier of journalism in which authors compete for readers’ attention. In this case, subscribers participate directly in deciding what will become news.

The other reason has to do with arguments recently spelled out with clarity and subtlety by Jonathan Rauch in The Constitution of Knowledge: A Defense of Truth (Brookings, 2021). Rauch gets the Swift Boat controversy mostly wrong, mixing up his own understanding of it with its interpretation by Donald Trump, but he is absolutely correct about the responsibility of the truth disciplines – science, law, history and journalism – to carefully sort out even the most complicated claims and counter-claims that endlessly strike sparks in the digital media.

Without the places where professionals like experts and editors and peer reviewers organize conversations and compare propositions and assess competence and provide accountability – everywhere from scientific journals to Wikipedia pages – there is no marketplace of ideas; there are only cults warring and splintering and individuals running around making noise.

EP exists mainly to cover economics. This edition has been an uncharacteristically long (re)introduction. My interest in these long-ago matters is strongly felt, but it is a distinctly secondary concern. I expect to return to these topics occasionally, on the order of once a month, until whatever I have left to say has been said: a matter of ten or twelve columns, I imagine, such as I might have written for the Taylor family’s Globe.

As a Stripes correspondent, I knew something about the American war in Vietnam in the late Sixties. As an experienced newspaperman who had been sidelined, I was alert to issues that developed as Kerry mounted his presidential campaign. And as an economic journalist, I became interested in policy-making during the first decade of the 21st Century, especially decisions leading up to the global financial crisis of 2008 and its aftermath. Comments on the weekly bulldogs are disabled. Threads on the Substack site associated with each new column are for bulldog subscribers only. As best I can tell, that page has not begun working yet. I will pay close attention and play comments there by ear.

David Warsh, a veteran columnist and an economic historian, is proprietor of Somerville-based economicprincipals.com, where this essay originated.