In which Practice gets ahead and Theory catches up

You could say, here at the beginning, that this is a story about three Great Depressions – the one that happened in the 20th Century, another that didn’t, in the 21st, and a third, presumptive one that threatens indistinctly sometime in the years ahead.

Most people recognize that Federal Reserve Board chairman Ben Bernanke and his colleagues somehow saved the day in 2008.

Journalists speculate about whether he might eventually deserve a Nobel Prize. Indeed, Bernanke and his European colleagues may well deserve the Nobel Prize – for Peace. The misery that would have ensued around the world had they failed to halt the banking panic in 2008 is hard to imagine. To tell a proper counterfactual story, What-If-Things-Went-Wrong, the imagination of Hilaire Belloc or Philip Roth would be required.

Bernanke didn’t prevent disaster single-handedly, naturally. Mervyn King, governor of the Bank of England, and Jean-Claude Trichet, president of the European Central Bank, played vital roles as well. So did Timothy Geithner, president of the Federal Reserve Bank of New York, U.S. Treasury Secretary Henry Paulson, President George W. Bush, House Speaker Nancy Pelosi and staffers in every department. But theirs are other, different stories. This version is about economists, what they teach their students and how they explain themselves to the rest of us.

But prizes in the economic sciences are awarded not for practical skill or uncommon valor but for advances in fundamental understanding. Such breakthroughs periodically occur, large and small. Not all of them are recognized quickly; some are not recognized at all. This is an account of one such transformation. It is a story about what economists learned from the financial crisis of 2007-08, and how they learned it.

I don’t mean all economists, of course, at least not yet. Mainly I have in mind a small group of specialists who have hung out a shingle under the heading of “financial macroeconomics.” Their place of business is, at the moment, open no longer than a single day in the busy schedule of the annual Summer Institute of the National Bureau of Economic Research, in Cambridge, Mass. Their meeting occurs at the intersection of two scientific traditions previously so independent of one another that they exist mostly in separate institutions – university departments of economics on the one hand, business school finance departments on the other.

A Familiar World Turned Upside Down

Many people think there are no Aha! moments in economics – that somehow everything to know is already known. It is true that deductive economic theories are extensively developed. But when high theory is placed in juxtaposition to practice that has developed in the world itself, surprising new issues for the theory sometimes emerge. The most famous example of this is what happened in 1963, when Ford Foundation program officer Victor Fuchs asked theorist Kenneth Arrow to write about the healthcare industry for the foundation.

It was then (and, about the same time, in a paper on the difficulty of managing large organizations) that Arrow unexpectedly surfaced the concerns known mainly to actuaries as “moral hazard” and “adverse selection.” These were fields for mischief and maneuver among human beings that economists previously had overlooked or ignored. They turned out to be enormously important in understanding the way business gets done. Elaborated by Arrow and many others under the heading of asymmetric information, these ideas made Arrow’s paper the beginning of the most important development in economic theory after 1950.

Something similar happened in August 2008, when Gary Gorton, a historian of banking at Yale University’s School of Management, gave a detailed account to the annual gathering of central bankers in Jackson Hole, Wyo., of an apparently short-lived panic in certain obscure markets for mortgage debt that had occurred in August the year before. Bengt Holmström, a theorist at the Massachusetts Institute of Technology, discussed Gorton’s 90-page paper. Gorton warned that the panic was ongoing; it hadn’t ended yet.

“We have known for a long time that the banking system is metamorphosing into an off-balance-sheet and derivatives world,” he told the audience. The 2007 run had started on off-balance-sheet vehicles and led to a scramble for cash. As with earlier panics, “the problem at the root was a lack of information”; the remedy must be more transparency.

In his discussion, Holmström zeroed in on the opposite possibility. Perhaps there was too much information in the market. Sometimes, Holmström observed, opacity, not transparency, was a market’s friend. There is no evidence that the audience took the slightest interest in, or understood, what the two men had to say – at least that day.

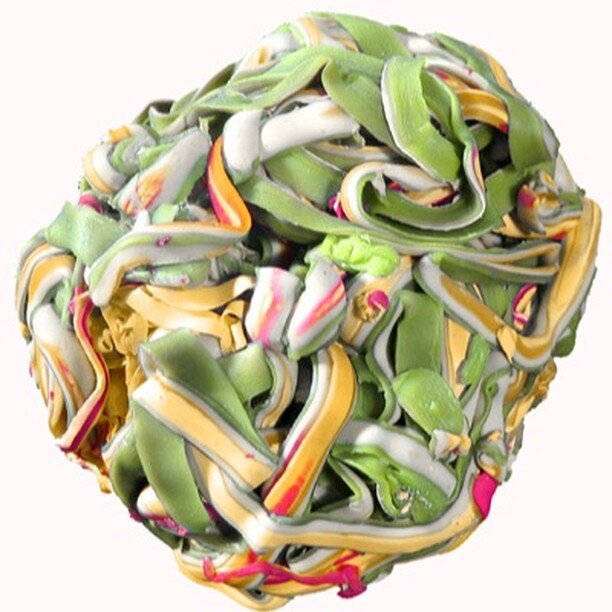

Talking afterwards that day about the characteristics of the long chains of recombinant debt they had discussed, Gorton and Holmström quickly agreed upon the salience of Holmström’s surmise. It was entirely counterintuitive, but perhaps opacity, not transparency, was intended by the sellers of debt (banks and structured investment vehicles) and welcomed by the institutional investors who were the purchasers, in each case for legitimate reasons. Perhaps the new-fangled asset-backed securities that served as collateral in the wholesale banking system, which soon would be discovered to be at the center of the crisis, had been deliberately designed to be hard to parse, not in order to deceive, but for the opposite reason: to make it more difficult for knowledgeable insiders to take advantage of the less well-informed.

Within days, the intense phase of the crisis had begun. Over the next few weeks, each continued to think through the implications of the conversation they had begun. In a talk to a large gathering at MIT, Holmström introduced a striking example of deliberate opacity: the sealed packets in which the De Beers syndicate sold wholesale diamonds. The idea was to prevent picky buyers from gumming up the sales by picking over the stones – the process of adverse selection.

In seminars, Gorton began comparing the panic to what had happened in the past after episodes of E. coli poisoning in ground beef. Until the beef industry learned to identify where along the line the infection had occurred, and therefore which supply of ground beef to recall, people would stop buying hamburger altogether. Subprime risk, not large in itself, had been spread around the world, inside and outside the banking system. Suddenly the fear of “toxic assets” had become general, and there was no way of recalling the mortgages.

When they met again in December, at a seminar at Columbia University in New York, the two economists, despite differences of background and temperament, agreed to collaborate in writing up what they had gleaned. By the following April, they had produced a draft paper, with Tri Vi Dang, a Columbia University lecturer who was enlisted to help. “Opacity and the Optimality of Debt for Liquidity Provision” began to be downloaded, slowly; it was all but incomprehensible, both men say.

Since then, Gorton has published three books about the crisis. The first, Slapped By the Invisible Hand: The Panic of 2007 (Oxford, 2010), contains the two papers about the 2008 financial crisis commissioned by Federal Reserve banks: the Jackson Hole paper, written on the eve of the crisis, and another, written six months later for an Atlanta Fed conference, explaining what had happened. That conference was held at the Jekyll Island resort where the Federal Reserve had been conceived a hundred years before. The second book, Misunderstanding Financial Crises: Why We Don't See Them Coming (Oxford, 2012), is an expanded version of a talk to the Board of Governors and their academic advisors in May 2010. The third, The Maze of Banking: History, Theory, Crisis (Oxford, 2015), is a collection of twenty papers, ranging from “Clearinghouses and the Origin of Central Banking in the United States,” from 1985, to “Questions and Answers about the Financial Crisis,” prepared for the U.S. Financial Crisis Inquiry Commission in 2010. A summer institute for bank regulators at Yale that Gorton and School of Management deputy dean Andrew Metrick established, with Alfred P. Sloan Foundation funding, has become a focal point for global central bankers.

Two Completely Different Systems

Holmström has been busy, too. The collaboration has continued. That first paper, titled at least for now “Ignorance, Debt, and Financial Crises,” and known informally as “DGH,” is still circulating in draft, nearly complete seven years later but still not registered as a working paper, much less published in a journal. Meanwhile, one or the other of the pair has presented a second paper, written with Dang and Gorton’s former student Guillermo Ordoñez and more accessible than the first, at more than 30 seminars since 2013. “Banks As Secret Keepers” argues that banks labor under a special handicap. They take deposits (that is, they produce what their balance sheets characterize as debt) and make loans (carried as assets on their balance sheets), which, of course, increasingly they sell (thereby producing debt on other balance sheets) in order to make more loans. They must then keep secret the information they develop about their loans, so that the debt they manufacture can serve depositors and purchasers as money-in-the-bank at face value, without the fluctuations that are taken for granted in equity markets.

Earlier this year, Holmström published a summary of the new work, in plain English, without a model. After years of hesitating, he wrote “Understanding the Role of Debt in the Financial System” for the research conference of the Bank for International Settlements, in Lucerne, in June last year. In January it appeared as a working paper, with a salient discussion by Ernst-Ludwig von Thadden, of the University of Mannheim. Presumably it will eventually become the long-delayed written version of Holmström’s presidential address to the Econometric Society, a set of slides he narrated in Chicago, in January 2012. It describes, I think, an Aha! moment of the first order.

Two fundamentally different financial systems were at work in the world, Holmström said. Stock markets existed to elicit information for the purpose of efficiently allocating risk. Money markets thrived on suppressing information to preserve the usefulness of bank money used in transactions and as a store of value. Price discovery was the universal rule in one realm; an attitude of “no questions asked” prevailed in the other. Deliberate opacity was widespread in the economic world: not just in those packets of De Beers diamonds and in used-car auctions, but in the routine reliance on overcollateralization in debt markets (especially prominent in the shadow banking system), the preference for coarse bond ratings, and the opacity of money itself. Serious mistakes would arise from applying the logic of one system to the other.

He wrote:

“The near-universal calls for pulling the veil off money market instruments and making them transparent reflects a serious misunderstanding of the logic of debt and the operation of money markets. This misunderstanding seems to be rooted in part in the public’s view that a lack of transparency must mean that some shady deals are being covered up. Among economists the mistake is to apply to money markets the logic of stock markets.”

This new view of the role of opacity in banking and debt is truly something new under the sun. One of the oldest forms of derision in finance involves dismissing as clueless those who don’t know the difference between a stock and a bond. Stocks are equity, a share of ownership. Their value fluctuates and may drop to zero, while bonds or bank deposits are a form of debt, an IOU, a promise to repay a fixed amount.

That economists themselves had, until now, missed the more fundamental difference – stocks are designed to be transparent; bonds seek to be opaque – is humbling, or at least it should be. But the awareness of that difference is also downright exciting to those who do economics for a living, especially the young. So surprising is this reversal of the dogma of price discovery that those trained in graduate schools of economics and finance sometimes experience the shift in Copernican terms: a familiar world turned upside down.

A significant number of other economists have been working along similar lines since the crisis. They include Markus Brunnermeier, of Princeton; Tobias Adrian, of the Federal Reserve Bank of New York; Hyun Song Shin, of Princeton (now seconded to the Bank for International Settlements as its chief economist); Arvind Krishnamurthy, of Stanford’s Graduate School of Business; Metrick, Gorton’s frequent collaborator, of Yale; Atif Mian, of Princeton; and Amir Sufi, of Chicago Booth. Graduate students and assistant professors are joining the chase in increasing numbers.

Finance as a Technological System

What are the policy implications of the new work? It is too soon to say much of anything concrete, except that when the new views of banking are assimilated, the ramifications for regulation will be extensive. From the beginning, though, the new ideas suggest a very different interpretation of the financial crisis from the usual ones.

The most common interpretations of the financial crisis deal in condemnation. The problem was the result of greed, or hubris, or recklessness, or politics, or some combination of those culprits. It was the banks, or it was Congress. A favorite is runaway innovation. Paul Volcker famously said, “I wish somebody would give me some shred of evidence linking financial innovation with a benefit to the economy.” The only really useful innovation of the past 25 years? The automated teller machine, according to Volcker. “It really helps people,” he said.

The alternative is to imagine that those who created the global banking system knew what they were doing and did it for good reasons – not just the bankers, but the regulators, rating agencies, the exchanges, insurers, lawyers, information systems and all the rest. They were responding not merely to their own itch to become rich (though certainly they were not unaware of the opportunities) but to legitimate public purposes that arose in growing markets – to raise money for new businesses, to finance trade, to broaden the market for home ownership, to safeguard retirement income, and so on.

I have come to think of this alternative view as a matter for historians of technology, and the relative handful of journalists who follow them. I was one such during my years in the newspaper business, and although I read many historians who made a deep impression on me – Clifford Yearly, Fernand Braudel, David Landes, Alfred Chandler, Charles Kindleberger – I felt a special admiration for Thomas P. Hughes, of the University of Pennsylvania, whose hallmarks were curiosity about all aspects of what he called the “human-built world” of technologies and devotion to the narrative form.

Hughes began his career with a scholarly biography of Elmer Sperry, inventor of gyroscopic guidance systems (Johns Hopkins, 1971), then spent a dozen years writing Networks of Power: Electrification in Western Society 1880-1930 (Johns Hopkins, 1983), a comparative study of the creation of electrical grids in three cities (Berlin, London and Chicago) and three regions (the Ruhr, the Lehigh Valley and Tyneside). In 1989 he published American Genesis: A Century of Invention and Technological Enthusiasm (Viking), and went on to write Rescuing Prometheus: Four Monumental Projects that Changed the Modern World (Random House, 1998) – the computer, the intercontinental ballistic missile, the Internet and various macro-engineering projects as exemplified by the highway tunnel under Boston, popularly known as “the Big Dig.” Between times, he edited, with his wife, conference volumes on Lewis Mumford and the history of RAND Corp. He finished with a book of lectures, Human-Built World: How to Think about Technology and Culture (University of Chicago, 2004).

There is very little narrative history like this yet in finance. A foretaste can be found in Surviving Large Losses: Financial Crises, the Middle Class, and the Development of Capital Markets (Harvard, 2007), the work of a trio of distinguished French economic historians: Philip Hoffman, Gilles Postel-Vinay, and Jean-Laurent Rosenthal. Peter Bernstein, a money manager for many years, certainly contributed his share when he wrote Capital Ideas: The Improbable Origins of Modern Wall Street (Free Press, 1992). Donald MacKenzie, a sociologist at the University of Edinburgh, has made a striking addition with An Engine, Not a Camera: How Financial Models Shape Markets (MIT, 2006). Perhaps a hundred years must pass before a Hughes-like perspective can develop.

Lacking such a well-informed and long-term view as that of Hughes, I have found it helpful to compare the global financial system that has developed in the last fifty years to another world-wide web that has developed over the same period of time. Building out the banking business can be likened, intuitively at least, to the somewhat similar task of assembling today’s Internet from component industries that were themselves mostly built from scratch – manufacturers of computers, semiconductor chips, networking and switching equipment, transmission cables, and, of course, the zillions of lines of software code necessary to operate and connect it all. The Internet Engineering Task Force and the Bank for International Settlements are hardly household names, but they share a common distinction: they presided over prodigious feats of engineering built in a hurry, entirely by practitioners, without much in the way of blueprints. I’ve also found it helpful to consult The Global Financial System: A Functional Perspective (Harvard Business School, 1995), edited by Dwight Crane, a deserving book which disappeared from view after the hedge fund Long-Term Capital Management went off the rails in 1998. Fund partner Robert Merton, a Nobel laureate, was the book’s principal author.

Money markets are different from markets for wheat, or land, or airplanes, or pharmaceuticals, or art, everything and anything that isn’t money – that is the whole point of our story. They are opaque, designed to be taken at face value. Who wants to argue about the value of a $100 bill? The opacity that typically cloaks money dealings provides opportunities galore for cupidity. Everybody has a favorite villain: high-frequency traders, auction riggers, short-sellers, self-dealing executives.

Compared to the scope of the flow of money and money-like instruments, however, these are probably minor diversions. The new global financial system has created trustworthy retirement systems around much of the world and facilitated the vast expansion of global trade that, during the second half of the 20th Century, contributed to lifting billions of people out of poverty and to ending the Cold War on peaceful terms. It has turned out to be surprisingly robust.

The basic story here concerns the financial crisis of 2007-08, the appearance six months later of “Opacity and the Optimality of Debt for Liquidity Provision,” and subsequent events. The novelty of the findings argues for taking a longer approach than usual to the telling of it. I will set out the context in which these events occurred as best I can over the next 14 weeks. That means covering a lot of territory. As I say, the implications for central bankers and the banking industry are largely unfathomed. But it is clear that the restructuring of macroeconomics already has begun.

Getting Out of Economic Trouble vs. Getting In

So much changed in the economics profession during the 20th Century that it is easy to forget that its grand narrative, the subdiscipline known as macroeconomics, is only 85 years old. It arose in the 1930s as an argument about the Great Depression, and it basically has remained one ever since. Economics itself – that is, the broad swathe known mainly as microeconomics – has become a giant technology, embedded in markets, management and law, rivaling physics, chemistry or medicine in the extent to which know-how originating in its domain has become part of the fabric of the economy itself. Since 1969, the economic sciences have even had a Nobel Prize.

Yet all that time macro has consisted mainly of unsettled arguments about the ’30s. In the ’50s and ’60s, Keynesians argued with monetarists. As they debated inflation in the ’70s and ’80s, they repackaged themselves as “saltwater” and “freshwater” economists (or “new” Keynesians and “new” classicals). But the differences of opinion between them remained mostly as they had been before. As Robert Lucas, of the University of Chicago, has put it, the Keynesian half of the profession was preoccupied with how the industrial economies of the world had gotten out of the Great Depression; the monetarist half with how they had gotten in.

Even now, it is hard to overstate the impact of the 1930s. For the generation that lived through that decade, the Depression meant privation and a pervasive sense of helplessness against the threat of loss: of a home, a job, a family, a fortune. The Depression precipitated the accession to power of the Nazis in Germany, solidified the control of Stalin in the Soviet Union, and reinforced the resentments and insecurities that led to World War II. For the generation that came of age after the war, the emphasis was on preventing a recurrence. Throughout the ’70s, intensifying as the 50th anniversary of the Crash of October 1929 approached, the Great Depression remained a central preoccupation. Could it happen again?

Keynesians put their namesake, John Maynard Keynes, at the center of their account. The circumstances that brought about the Great Depression itself had been an accident, a one-off event stemming from a series of historic mistakes: the huge reparations forced on Germany after World War I; draconian applications of the gold standard; a stock market bubble; a disastrous tariff passed by Congress in the heat of the crisis that added to the downward pressure; the decline of the British Empire, and the refusal of the U.S. to step up to its new global role.

The net effect, Keynesians say, was that the world economy became stuck in a “down” position, a “liquidity trap” from which there would be no automatic escape without government action. Interest rates had fallen so low that monetary policy had become powerless to stimulate the economy. Easing bank reserve requirements would be like “pushing on a string.” “Pump-priming” was required instead: government spending to take up the slack in private flows.

Monetarists sometimes put another man at the core of their account – a man who wasn’t there: Benjamin Strong, president of the powerful Federal Reserve Bank of New York, a former J.P. Morgan partner and the man who understood the powers of the system best. Strong died in 1928, just as the Federal Reserve System was beginning an ill-advised attempt to rein in a speculative bubble in stocks. This tightening produced the 1929 Crash. His successors – especially the Board of Governors in Washington, D.C. – then converted what might have been an ordinary recession into a Great Depression by ineptitude and neglect.

Instead of acting to counter a series of panics, the Fed tightened further after 1929, tipping recession into depression and adding to the sense of general helplessness – the “Great Contraction,” Milton Friedman and Anna Schwartz later called it. Central bankers made crucial mistakes in this account; Franklin Delano Roosevelt halted the panic they started, chiefly by declaring a bank holiday once he took office and by taking the United States off the gold standard.

For an up-close and highly personal look at this debate over 85 years, see the two books of interviews by Randall Parker, of East Carolina University: Reflections on the Great Depression (Elgar, 2002) and The Economics of the Great Depression: A Twenty-First Century Look Back (Elgar, 2007). The first volume consists of interviews with economists who lived through the Great Depression early in their careers; the second, with economists who could have been their children and grandchildren. The contrast is striking. The voices of the first generation vividly convey the experience of desperate uncertainty and despair. Those of their students reflect no such urgency.

The crisis of 2008 pretty much resolved the question, at least at the most basic level, in favor of the monetarists -- economists who saw the history of the banking industry and the operations of the central bank as paramount, at least in the case of 2008, and perhaps in the early 1930s as well.

That is to say, Milton Friedman won the argument. By presenting a situation in which a second depression even worse than the first might have occurred had the bankers failed to act (and their respective treasuries failed to support them), the episode demonstrated the centrality of government’s role as steward of the economy and, in a crisis, as lender of last resort. That doesn’t disprove Keynesian arguments about the efficacy of economic stimulus so much as emphasize how non-parallel the argument has been. If the disinflation of the ’80s didn’t convince you that central bank policy was, well, central to economic performance, then the crisis of 2008 should have made up your mind. Money and banking matter.

On the other hand, if you believe, as many monetarists do, that a simple rule governing the expansion and contraction of the supply of money is all that is required to achieve tranquility, then probably you lost. The Keynesian Paul Samuelson, and all the others of his ilk, won this debate, at least if you side with Ben Bernanke. He put it this way at an IMF conference in April: “I am perfectly fine using such [monetary] rules as one of many guides for thinking about policy, and I agree that policy should be as transparent and systematic as possible. But I am also sure that, in a complex, ever-changing economy, monetary policymaking cannot be trusted to a simple instrument rule.” Freshwater economists, especially theorists of the Minnesota school and its analysis of “real business cycles,” were relegated to the fringe.

In short, monetarists won the battle of what macroeconomics is about; Keynesians won the argument of how it would be done. Both sides lost their advantage in the continuing contest for the discipline’s commanding heights.

Macroeconomics changed, decisively, with the financial crisis of 2008. The narrative now has three key episodes instead of one: the Panic of 1907, which led to the creation of the Fed, in 1913; the Contractions of ’29-’33 and ’37-’38, which, taken together, produced a decade of depression; and the crisis of 2008. All the ups and downs between 1945 and 2007 pale into insignificance – even, for present purposes, the disinflation that followed the recession of 1980-82. Banks and the central banks that regulate their conduct are once again at center stage. Financial macro points the way to a New Macro – one better suited to deal with a changing world.

Thinking Caps that Come with Blinders

I am a journalist, a newspaperman by training, not an economist or a historian of banking, but I know my way around. I have covered the field for 40 years and, for better or worse, I am long past the point where I report solely what economists think. I remain very interested in their opinions, but now often I express opinions of my own. I had the advantage of a good undergraduate education in the Social Studies program at Harvard College before I started, and the further advantage of knowing Charles P. Kindleberger pretty well in his retirement years. I am also of the generation for whom the historian of science Thomas Kuhn looms large.

More than 50 years have passed since The Structure of Scientific Revolutions appeared, in 1962. A forbidding thicket of literature now surrounds the book. Philosophers of science have resumed their battles with historians. Indeed, if you only read the introduction to the new anniversary edition, by philosopher Ian Hacking, you might wonder what the fuss had been about (“The Cold War is long over and physics is no longer where the action is”).

To most of us, scientists and non-scientists alike, Kuhn’s little book seems as illuminating as ever, a guidebook to the social institutions and their animating values that make the sciences, including many social sciences, significantly different from everything else – experiments, proof, journals, textbooks, graduate education, university departments, and so on. You cannot understand the internal workings of a science, including economics, without it.

Two facets of Kuhn’s work are particularly relevant here. One has to do with the term he turned into a household word. Before Kuhn appropriated it to designate what he thought was special about science, “paradigm” meant nothing more than the models we use, all but unconsciously, to illustrate the right way to do things with language – how to conjugate a particular verb, for instance: we do, we did, we have done. That’s a paradigm. There was a certain imprecision about Kuhn’s use of the word right from the start. In fact, soon after the book appeared, linguist Margaret Masterman identified 21 slightly different senses in which Kuhn had employed the term.

A paradigm was a classic work; an agreed-upon achievement; a whole scientific tradition based on an achievement; an analogy; an illustration; a source of tools, of instruments; a set of political institutions; an organizing principle; a way of seeing. Over time, the preferred meanings boiled down to two: a paradigm is a foundational book, or a famous article, a source of “revolution” in the way a question is conceived. It is also the way of seeing a particular set of problems that such a work conveys.

Some of these foundational works were ancient: Aristotle’s Physica, Ptolemy’s Almagest. Others were more recent: Newton’s Principia and Opticks; Franklin’s Electricity; Lavoisier’s Chemistry; Lyell’s Geology. These and many other similar works “served for a time implicitly to define the legitimate problems and methods of a research field for succeeding generations of practitioners,” wrote Kuhn. (He didn’t mention Wealth of Nations, which appeared about the same time as Electricity and Chemistry; Geology appeared 50 years later.)

Around these literary crossroads grew up communities of inquiry characterized by what Kuhn called “normal science,” habit-governed, puzzle-solving modes of inquiry which, when they were successful, led to further results and, at least among practitioners, a shared sense of “getting ahead.” How did such works attract would-be scientists, even before the theorizing had begun? Masterman and Kuhn agreed that embedded in these classic works were instances of paradigms themselves: first steps, pre-analytic ways of seeing, intuitive senses of how things must work. Paradigms were “what you use when the theory isn’t there,” said Masterman. They were concrete pictures of one thing used analogically to reason out the workings of another, she added. They were “thinking caps,” said Kuhn.

An important property of paradigms is that the thinking cap, when you tug it on, seems to include a set of blinders. Kuhn experimented briefly with the word “dogma” to capture this elusive property. He dwelt for a time on the famous image of gestalt switches, of ambiguous figures that could be interpreted one way or another, to illustrate what he meant by “way of seeing” – although once the flip occurs in science, he argued, it doesn’t really flip back. When he had settled on the carefully neutral term paradigm to connote the thinking cap, he needed a different term to describe the effect of the flip and the blinders. From mathematics, he borrowed the word incommensurable, meaning “no common measure.” Among mathematicians, proof that the square root of two cannot be written as a simple fraction – in other words, that it is an irrational number – is a favorite illustration. The concept is some 2,500 years old.

This quality of dogmatism, exercised with restraint, is what defines a paradigm and makes science different from most other pursuits of truth and beauty: engineering, law, literature, history, philosophy, practically everything but religion (whose dogmatism often takes a more ferocious turn). Art has many traditions, and an artist isn’t compelled to choose. A historian might feel strongly about one interpretation of events yet recognize that reasonable persons might credit another. Lawyers exist to disagree. But scientists, interested only in what they can prove, can embrace only one paradigm at a time. If this is true, it can’t be that. Within a scientific community, there is no such thing as agreeing to disagree. There is only the shared commitment to continue the quest to pin down the matter.

This is what Kuhn meant when he wrote that “there are losses as well as gains in scientific revolutions, and scientists tend to be particularly blind to the former.” Paul Krugman’s famous parable of mapping Africa illustrates the incommensurability of differing approaches to knowledge. When geographers committed to modern surveying techniques began their work in the 19th Century, they ignored accumulated folk knowledge of the landscape (some of it false, but much of it true) in favor of mapping they had done themselves. The interiors of traditional maps of Africa emptied out. Knowledge of local features was lost, in some instances for considerable periods of time, until the hinterlands could be explored by scientific expeditions. A continent that had been tolerably well known became for a few decades “darkest Africa,” just long enough, a cynic might think, for other modern methods to carve it up along colonial lines.

Blinders – scientific dogma, if you prefer – routinely operate wherever science is brought to bear, in matters large and small. Often the blinders are acknowledged. The technical way of leaving out what you aren’t ready to explain is to describe it as “exogenous” to your model, meaning determined by forces outside its scope. The more colloquial way is to say that you will bracket what you don’t understand well enough to explain, meaning that you may note its presence by putting it in [brackets], but otherwise pretty much ignore it, for now.

A tendency to barrel ahead at full speed with blinders on is, I think, the natural condition of science, at least insofar as the science in question is removed from public policy. Natural scientists are generally pretty good at recognizing the limits of their authority; economists perhaps a little less so. Certainly the role of scientific dogma helps explain how economics got to be the way it is today. My license as a journalist permits me to speculate on the matters at the heart of this story. Why do we have banks? Why do we have central banks? And why, until recently, have economists tended to leave them out?

Practice and Theory

The other aspect of Thomas Kuhn’s thought that I have found especially helpful over the years is his interest in the relationship among scientists, technologists, and practitioners, or craftsmen, as he called them. Long ago I began accepting economists’ claims to the mantle of science. Those claims left a great many non-economist expositors of knowledge out of the spotlight. They invited plenty of resentment, too. Kuhn had written that “scorn for inventors shows repeatedly in the literature of science, and hostility to the pretentious, abstract, wool-gathering scientist is a persistent theme in the literature of technology.”

Certainly that has been my experience. I’ve spent my career in borderlands where experts of all kinds mix and mingle. As a newspaperman I talked to business economists one day and university economists the next; to business school professors, accountants, strategists, and historians; to political scientists, sociologists, economic anthropologists; and, of course, to practitioners of all sorts – entrepreneurs, bankers, business executives, venture capitalists, policy analysts and policymakers. Where wisdom was concerned, there was no clear-cut hierarchy among them – among, for example, Paul Samuelson, of MIT, John Bogle, of Vanguard Group, Warren Buffett, of Berkshire Hathaway, and former Treasury Secretary Nicholas Brady; or among Milton Friedman, of the University of Chicago, Leo Melamed, of the Chicago Mercantile Exchange, David Booth, of Dimensional Fund Advisors, and former Fed chairman Paul Volcker. Each knew a lot about some things and, often, very little about others. None knew everything.

What could be said about the nature of the relationship? Kuhn described three broad paths by which knowledge came into the world. In some matters, practitioners had become sovereign long ago. Scientists who studied what craftsmen had already learned to do sometimes found they could explain much but add little, Kuhn wrote. When the astronomer Johannes Kepler sought to discover the proportions that would enable casks to hold the most wine with the least wood, he helped invent the calculus of variations, but he made no contribution to the barrel industry. Thanks to plenty of trial and error, coopers were already building to the specifications he derived.

Inventors dominated developments in other realms, often for long periods of time. These were tinkerers who might borrow methods from science, experiment in particular, but who otherwise gave little back. Plant hybridizers and animal breeders were in this category. James Watt and the many others who developed the steam engine and adapted it to use are good examples. Many improvements to heat engines were made, enough that by 1824 the French physicist Sadi Carnot had become interested in their theoretical limits of efficiency. The field of thermodynamics emerged from his calculations and from those of Rudolf Clausius and William Thomson, Baron Kelvin, but not in time to deliver anything more than marginal improvements to steam engines, as locomotive designers kept getting better apace. Instead, thermodynamics led, in concert with developments in electromagnetism and statistical mechanics, to the quantum revolution.

A third mode of interaction, in which scientists preside over a cornucopia of new products and processes derived from purposeful research, is the more familiar one today. This one got its start in the German dye industry, with the beginnings of organic chemistry in the 1840s; before long, Faraday’s experiments with current electricity had become Maxwell’s electromagnetic field theory. Since then, Kuhn wrote, basic science had “transformed communications, the generation and distribution of power (twice), the materials both of industry and of everyday life, also medicine and warfare.”

As a physicist with a special interest in the history of electromagnetism, Kuhn was well aware that this had been anything but a linear process. Practice often ran ahead of science in the nineteenth century, led by ingenious technologists like Thomas Edison, Nikola Tesla, George Westinghouse, Charles Steinmetz, and Alexander Graham Bell. But it was only after Heinrich Hertz broadcast and received radio waves in his laboratory, demonstrating the existence of the electromagnetic spectrum that James Clerk Maxwell had described 25 years before, that Guglielmo Marconi and Alexander Popov began seeking practical applications. Soon Max Planck and Albert Einstein put physics decisively ahead. Practice before theory, then better theory, and better practice based on theory. In his Autobiography, Andrew Carnegie crowed that while other steel companies felt they couldn’t afford to employ a chemist, he couldn’t afford to be without one, so great was scientists’ assistance in identifying the best ores and most efficient processes.

One result of these forever-shifting salients of practice, technology and science, especially in the domains of the fledgling social sciences, is that highly experienced generalists, or expositors of approaches thought to have been swept aside, sometimes understand particular situations better than highly trained experts do. Richard Feynman, the great physicist, put it this way: “A very great deal more truth can become known than can be proved.”

Kuhn was a cautious man. He had little to say about economics, because the job of getting philosophers and physical scientists to rethink their prior convictions was hard enough. But as a scion of the family that built the investment banking firm of Kuhn, Loeb & Co., he would have agreed, I like to think, that all three modes of knowing were present in economic life. I suspect, too, that he would have agreed that John Stuart Mill had the basic history of economic science right when he wrote in the first sentences of Principles of Political Economy in 1848,

In every department of human affairs, Practice long precedes Science: systematic inquiry into the modes of action of the powers of nature is the tardy product of a long course of efforts to use those powers for practical ends. The conception, accordingly, of Political Economy as a branch of science is extremely modern; but the subject with which its enquiries are conversant has in all ages necessarily constituted one of the chief practical interests of mankind, and, in some, a most unduly engrossing one. That subject is Wealth.

Mill wrote at a time when economics and finance were more or less the same thing. Twenty-five years later, the situation was beginning to change dramatically, with economics going in one direction and finance in another. Surveying the situation, the banker and journalist Walter Bagehot wrote, “Political economy is an abstract science which labors under a special hardship. Those who are conversant with its abstractions are usually without a true contact with its facts; those who are in true contact with its facts have usually little sympathy with and little cognizance of its abstractions.” The gap has only widened since then.

And that’s where Gorton and Holmström come in. Kenneth Arrow, a brilliant theorist, recognized the potential significance of asymmetric information because he had studied to become an actuary as a young man. But neither Gorton nor Holmström knew enough by himself to spot the significance of opacity, much less to work it, slowly, into the high-level consensus of technical economics.

Had Gorton not studied banking history for 25 years, so that he knew new forms of bank money are regularly developed and recognized a panic when he found himself in the midst of one; had Holmström not studied organizations and information economics for 25 years; and had both not had considerable experience in markets themselves, they could not have made their contribution. The story of their collaboration, set against the 20th Century economics that they learned in graduate school, makes an interesting tale.

So let’s say, here at the beginning, that this is a story about practice and theory and more practice and better theory in economics. It is a story about banks and central banks and why we have them. It’s a story about the relationship between the growth of knowledge and economic growth. It is, in other words, a history of economics since 2008.

David Warsh, a longtime financial journalist and an economic historian, is proprietor of economicprincipals.com